Building a Real-Time LED Classifier on ESP32 with Computer Vision

How I implemented on-device computer vision on an ESP32-S3 microcontroller using HSV color filtering for real-time LED detection and classification.

Introduction

Embedded computer vision is often associated with powerful single-board computers like Raspberry Pi or NVIDIA Jetson. But what if you could implement real-time image classification on a $10 microcontroller with just 512KB of on-chip SRAM?

In this project, I built a complete computer vision pipeline that runs entirely on an ESP32-S3 microcontroller. The system captures live camera frames, processes them on-device using HSV color filtering, and classifies colored LEDs (Red, Green, Blue) in real time, all while controlling physical LEDs that provide immediate feedback.

No cloud processing. No external compute. Just efficient embedded systems engineering.

Table of Contents

- The Challenge

- System Architecture

- HSV Filtering in Action

- Implementation Details

- Experimentation and Tuning

- Real-Time Performance

- Conclusion

The Challenge

Computer vision on the ESP32-S3 means working with just ~512KB SRAM, no GPU, and pure CPU processing. In embedded environments, optimization isn't optional—it's mandatory.

My first instinct was to use OpenCV's C++ library, but it isn't a practical fit for the ESP32: the library's dependencies and memory footprint far exceed what the microcontroller can comfortably handle. I also ruled out machine learning models, since model weights, an inference engine, and runtime buffers would all compete for the same scarce memory.

The solution: HSV color filtering—a lightweight classical computer vision technique that requires no model weights, uses simple arithmetic operations, and provides deterministic performance. The goal was to build a system that captures frames, processes them on-device, classifies LEDs (Red, Green, Blue), controls output LEDs, and achieves 5-10 FPS—all on a $10 microcontroller.

System Architecture

System architecture showing data flow from camera to LED output

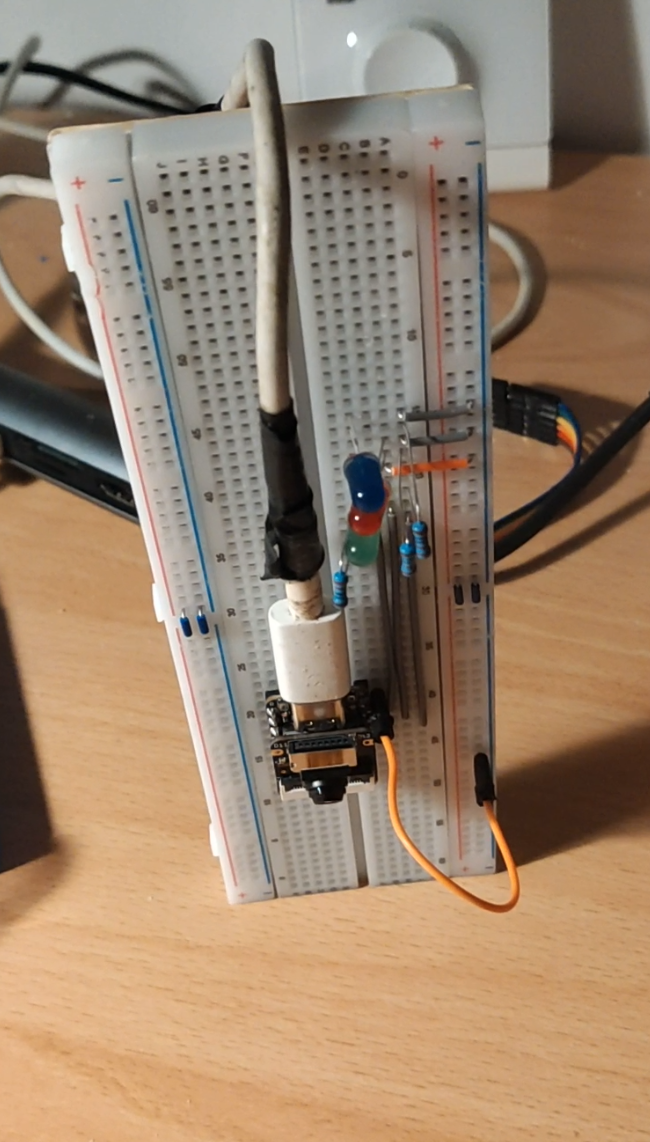

System architecture showing data flow from camera to LED outputHardware Components

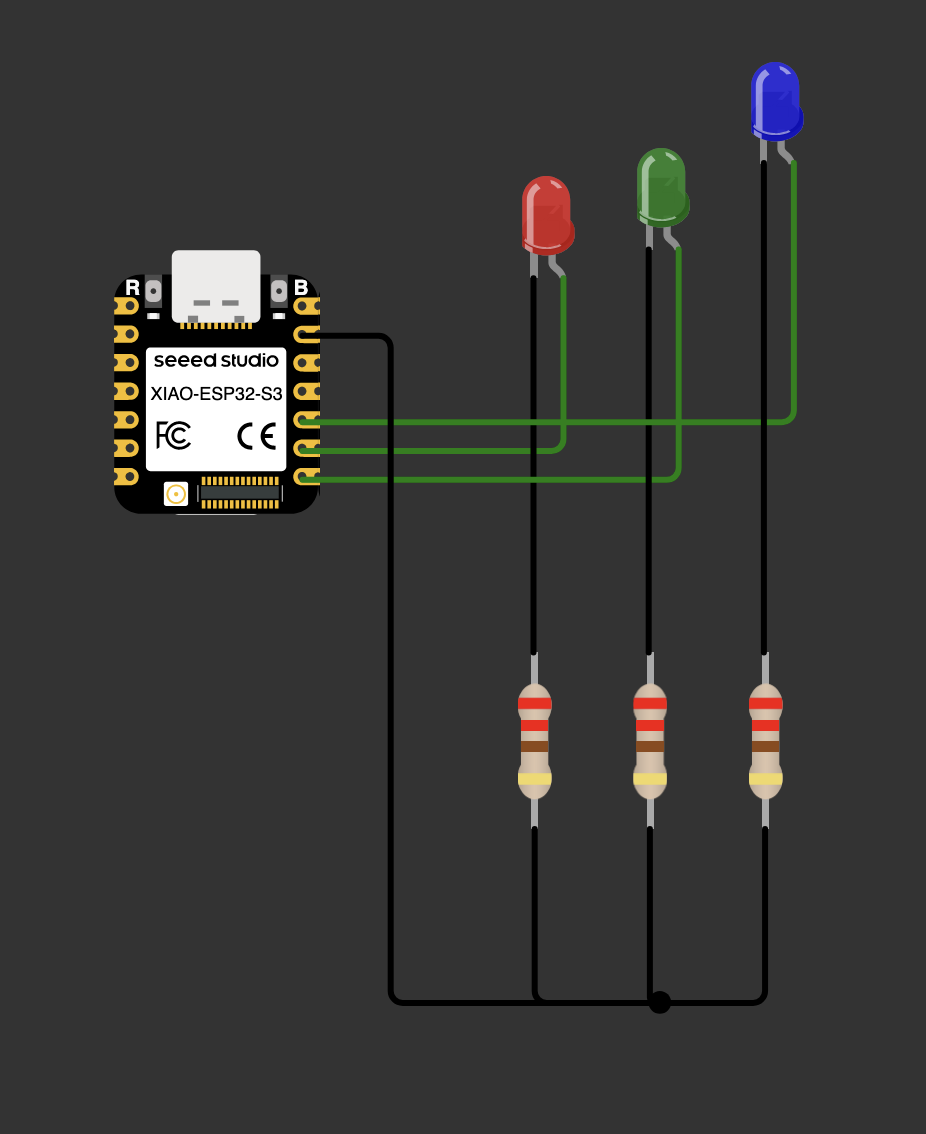

- ESP32-S3 Microcontroller (XIAO ESP32S3): The brain of the system

- OV2640 Camera Module: Captures QVGA frames (320x240 pixels)

- RGB LEDs: Connected to GPIO pins 7, 8, 9 for physical feedback
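
The constraints from The Challenge become concrete with a little arithmetic. The sketch below computes the size of one decoded QVGA frame in RGB888 and the CPU cycles available per pixel at the 5-10 FPS target. It assumes a single core running at the ESP32-S3's 240 MHz maximum clock and treats the whole frame time as available for pixel work; the constant and function names are hypothetical.

```c
#include <stdint.h>

/* Back-of-the-envelope constants (names are hypothetical). */
#define FRAME_W       320u               /* QVGA width */
#define FRAME_H       240u               /* QVGA height */
#define BYTES_RGB888  3u                 /* one byte each for R, G, B */
#define SRAM_BYTES    (512u * 1024u)     /* ~512KB on-chip SRAM */
#define CPU_HZ        240000000u         /* assumed: one core at the 240 MHz maximum */

/* Bytes needed to hold one decoded RGB888 frame. */
static uint32_t rgb888_frame_bytes(void) {
    return FRAME_W * FRAME_H * BYTES_RGB888;
}

/* Upper bound on CPU cycles per pixel if all of the frame time went to pixel work. */
static uint32_t cycles_per_pixel(uint32_t target_fps) {
    return (CPU_HZ / target_fps) / (FRAME_W * FRAME_H);
}
```

A decoded RGB888 frame alone is 230,400 bytes, about 44% of the 512KB SRAM, and at 10 FPS each pixel gets roughly 312 cycles (625 at 5 FPS). These are upper bounds: capture, JPEG decoding, and LED control also consume part of every frame's budget, which is why the per-pixel work has to stay to a handful of comparisons.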

HSV Filtering in Action

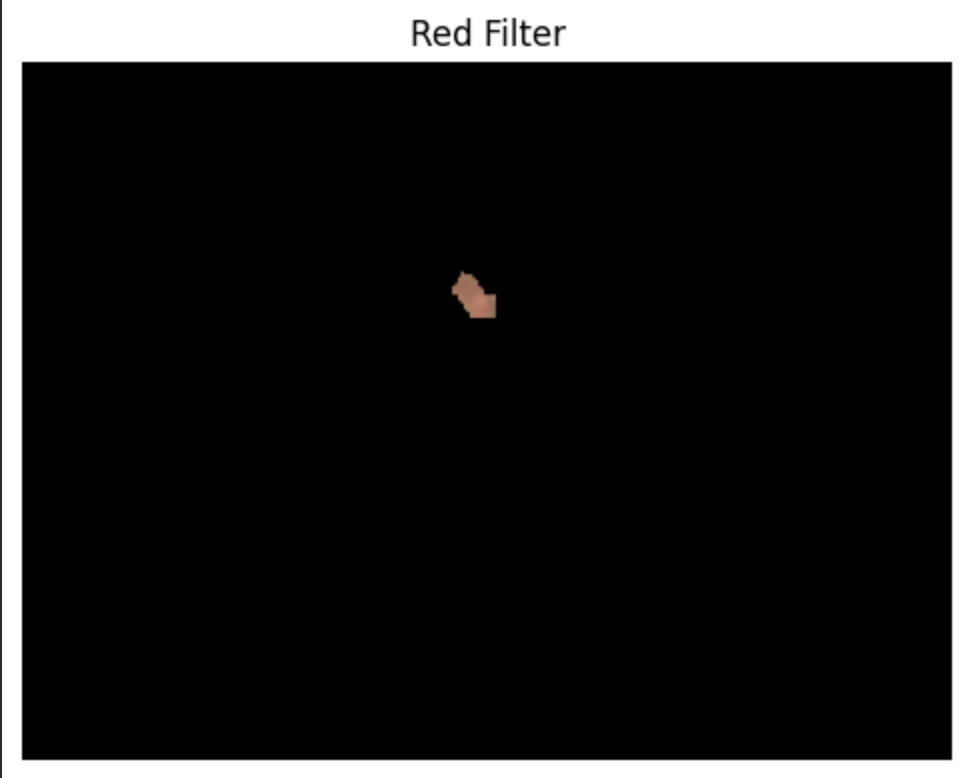

Here's how HSV filtering isolates specific LED colors from a scene. Each filter produces a binary mask in which pixels that fall inside the target color's HSV range are white and all others are black. The classifier counts the white pixels in each mask: if the count exceeds a threshold of 50 pixels, that color's LED is classified as "detected."

Original frame and the same frame with the red filter applied
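
Counting the white pixels in a mask and applying the threshold takes only a few lines. This sketch assumes a one-byte-per-pixel mask in which any non-zero value marks a match; `count_white_pixels` and `color_detected` are hypothetical names, and only the 50-pixel threshold comes from this project.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define DETECTION_THRESHOLD 50u  /* a color must exceed this many matching pixels */

/* Count matching ("white") pixels in a one-byte-per-pixel binary mask.
 * Assumption: any non-zero byte marks a pixel inside the color's HSV range. */
static size_t count_white_pixels(const uint8_t *mask, size_t n_pixels) {
    size_t count = 0;
    for (size_t i = 0; i < n_pixels; ++i) {
        if (mask[i] != 0) {
            ++count;
        }
    }
    return count;
}

/* A color counts as detected only when its count is strictly above the threshold. */
static bool color_detected(size_t white_pixels) {
    return white_pixels > DETECTION_THRESHOLD;
}
```

On the device the mask doesn't need to be stored at all: the same count can be accumulated while scanning the HSV pixels, which avoids a 76,800-byte buffer per color at QVGA.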

Implementation Details

Each frame passes through a four-stage processing pipeline:

- JPEG Decoding - Convert compressed JPEG from camera to RGB888 format for pixel-level access

- RGB to HSV Conversion - Transform pixels to HSV color space where color information is separated from brightness

- HSV Filtering - Count pixels matching predefined HSV ranges for each target color (red, green, blue)

- Threshold Classification - If a color's matching-pixel count exceeds the threshold (50 pixels), classify that LED as detected
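
Stage 2 is where most of the per-pixel arithmetic happens. The following is a minimal integer-only RGB-to-HSV conversion, assuming hue in degrees [0, 360) and saturation and value scaled to 0-255. The article doesn't say which scale the firmware uses (OpenCV, for example, stores 8-bit hue as 0-179), and the names `hsv_t` and `rgb_to_hsv` are hypothetical.

```c
#include <stdint.h>

/* Hue in degrees [0, 360); saturation and value scaled to [0, 255] (assumed scale). */
typedef struct {
    uint16_t h;
    uint8_t s;
    uint8_t v;
} hsv_t;

/* Integer-only RGB888 -> HSV conversion for a single pixel. */
static hsv_t rgb_to_hsv(uint8_t r, uint8_t g, uint8_t b) {
    int cmax = r > g ? (r > b ? r : b) : (g > b ? g : b);
    int cmin = r < g ? (r < b ? r : b) : (g < b ? g : b);
    int delta = cmax - cmin;
    hsv_t out;

    out.v = (uint8_t)cmax;                                     /* brightness */
    out.s = cmax == 0 ? 0 : (uint8_t)((255 * delta) / cmax);   /* colorfulness */

    if (delta == 0) {
        out.h = 0;                         /* grey pixel: hue is undefined, use 0 */
        return out;
    }

    int h;
    if (cmax == r) {
        h = (60 * (g - b)) / delta;        /* red sector: -60..60 */
    } else if (cmax == g) {
        h = 120 + (60 * (b - r)) / delta;  /* green sector: 60..180 */
    } else {
        h = 240 + (60 * (r - g)) / delta;  /* blue sector: 180..300 */
    }
    if (h < 0) {
        h += 360;                          /* wrap negative red hues to 300..359 */
    }
    out.h = (uint16_t)h;
    return out;
}
```

Because V and S depend only on the channel maximum and spread, brightness changes mostly move V while leaving H stable, which is what makes fixed hue windows workable.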

Red LEDs required special handling due to hue wraparound (0° and 360° both represent red). The solution: check two separate HSV ranges and sum the matching pixels.
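
The two-range red check could look like the following sketch. It assumes pixels have already been converted to HSV with hue in degrees; the bounds in `RED_LOW` and `RED_HIGH` are placeholder values for illustration, not the tuned parameters from this project.

```c
#include <stddef.h>
#include <stdint.h>

/* One HSV pixel: hue in degrees [0, 360), saturation and value in [0, 255]. */
typedef struct { uint16_t h; uint8_t s; uint8_t v; } hsv_px_t;

/* Inclusive hue window plus lower bounds on saturation and value. */
typedef struct {
    uint16_t h_lo, h_hi;
    uint8_t s_lo, v_lo;
} hsv_range_t;

/* Placeholder bounds for illustration only (not the project's tuned values). */
static const hsv_range_t RED_LOW  = {   0,  10, 120, 100 };  /* hues near 0 degrees */
static const hsv_range_t RED_HIGH = { 350, 359, 120, 100 };  /* hues near 360 degrees */

static int in_range(hsv_px_t p, hsv_range_t r) {
    return p.h >= r.h_lo && p.h <= r.h_hi && p.s >= r.s_lo && p.v >= r.v_lo;
}

/* Count red pixels by checking both ends of the hue circle and summing. */
static size_t count_red(const hsv_px_t *px, size_t n) {
    size_t low = 0, high = 0;
    for (size_t i = 0; i < n; ++i) {
        if (in_range(px[i], RED_LOW))  ++low;
        if (in_range(px[i], RED_HIGH)) ++high;
    }
    return low + high;  /* windows are disjoint, so no pixel is counted twice */
}
```

Since the two hue windows don't overlap, summing the counts never double-counts a pixel. A single "wrapping" test (`h >= 350 || h <= 10`) would be equivalent; two explicit ranges keep every color in the same inclusive lo/hi form.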

Experimentation and Tuning

Before deploying to the ESP32, I built a PC-based experimentation environment for rapid iteration:

The Development Loop

- Capture test images from the ESP32 camera

- Process offline using the experimentation harness

- Visualize results in Jupyter notebooks

- Tune HSV ranges until detection is reliable

- Deploy optimized parameters to ESP32

This approach sped up development considerably: changing a parameter no longer meant reflashing the firmware.

Key Tuning Insights

- Saturation lower bounds: Too low = false positives from ambient light reflections

- Value lower bounds: Too low = shadows trigger false detections

- Hue ranges: Trade-off between specificity (narrow) and robustness (wide)

- Threshold value: Balances sensitivity vs. noise rejection
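
The effect of the saturation and value floors is easy to see on a few hand-picked pixels. In this sketch the three sample pixels and both green ranges are hypothetical values chosen to illustrate the tuning insights above, not measurements from the project.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct { uint16_t h; uint8_t s; uint8_t v; } hsv_px_t;  /* hue in degrees */

typedef struct {
    uint16_t h_lo, h_hi;  /* inclusive hue window */
    uint8_t s_lo, v_lo;   /* lower bounds for saturation and value */
} hsv_range_t;

/* Count pixels that fall inside one HSV range. */
static size_t count_matches(const hsv_px_t *px, size_t n, hsv_range_t r) {
    size_t count = 0;
    for (size_t i = 0; i < n; ++i) {
        if (px[i].h >= r.h_lo && px[i].h <= r.h_hi &&
            px[i].s >= r.s_lo && px[i].v >= r.v_lo) {
            ++count;
        }
    }
    return count;
}

/* Hypothetical greenish pixels; values are illustrative, not measured. */
static const hsv_px_t SAMPLE[3] = {
    {120, 220, 240},  /* lit green LED: saturated and bright */
    {115,  30, 250},  /* glare from ambient light: bright but nearly unsaturated */
    {125, 200,  20},  /* shadow: saturated hue but very dark */
};

static const hsv_range_t GREEN_LOOSE = { 90, 150,  10, 10 };  /* low S/V floors */
static const hsv_range_t GREEN_TIGHT = { 90, 150, 100, 80 };  /* raised S/V floors */
```

With `GREEN_LOOSE` all three pixels match; with `GREEN_TIGHT` only the lit LED does. Raising the floors too far has the opposite cost: a dim or partly occluded LED can drop below the 50-pixel threshold.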

Real-Time Performance

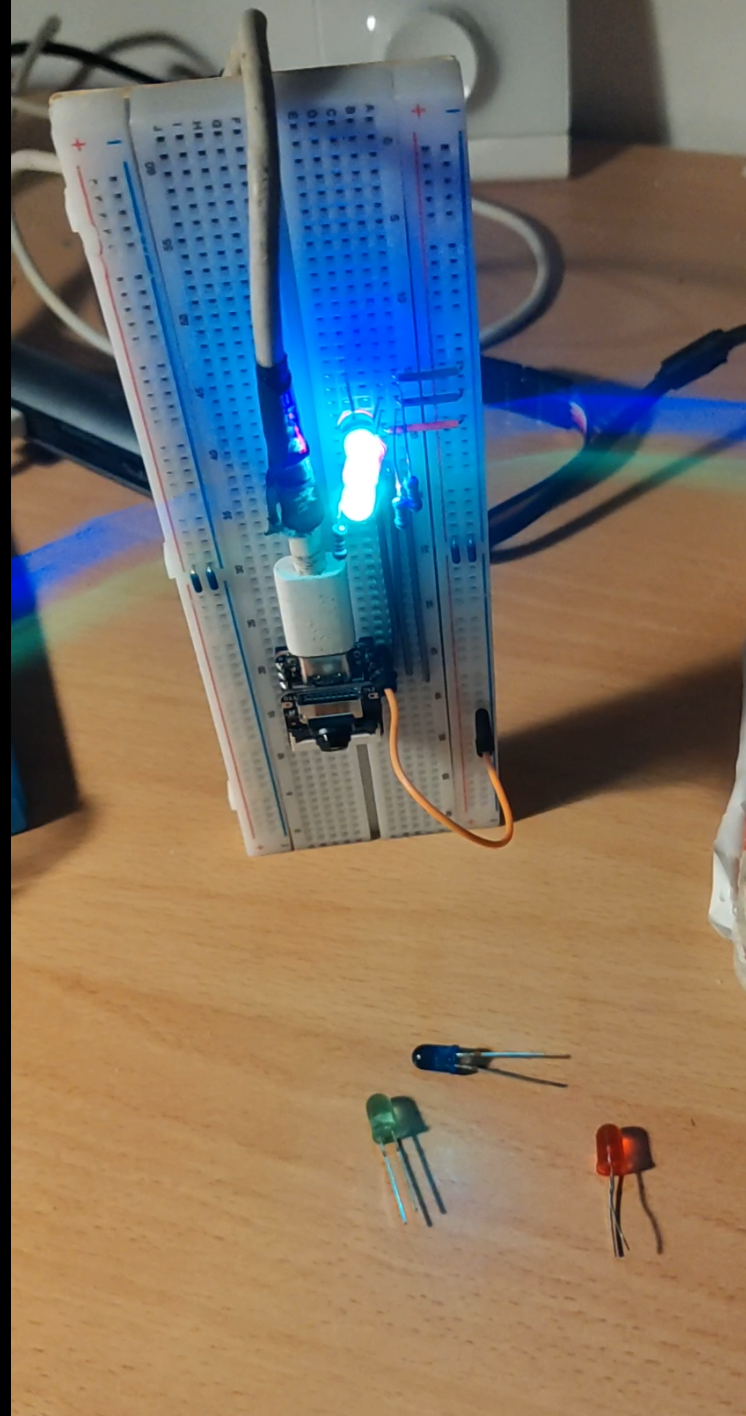

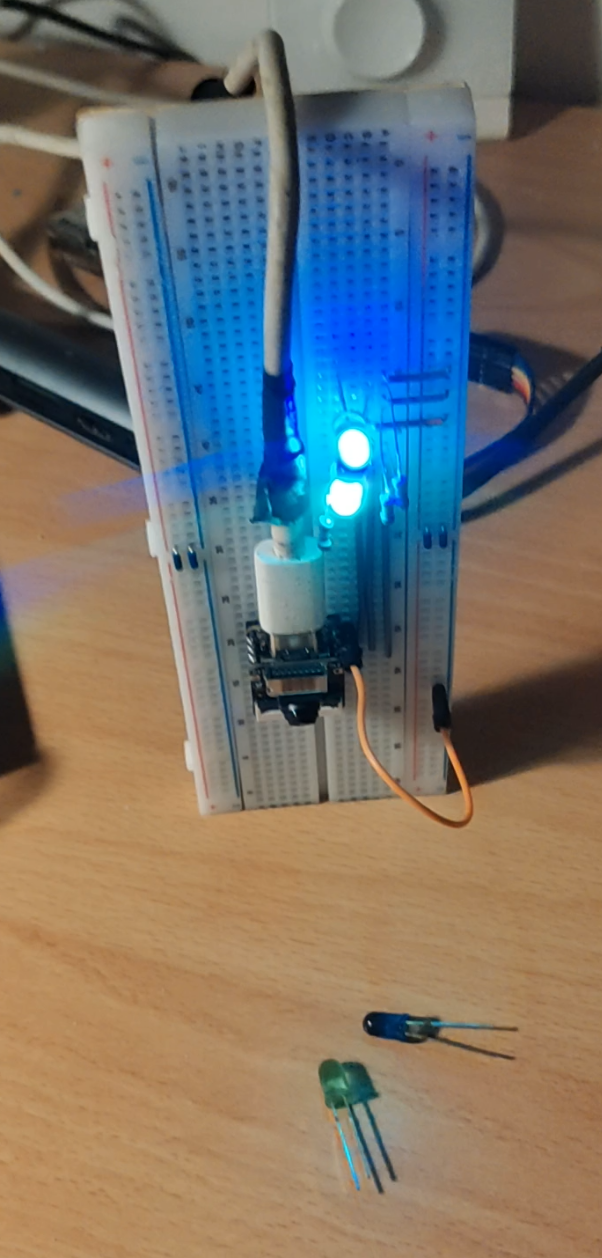

The system runs in real time on resource-constrained hardware and correctly detects each of the LED combinations shown here. The physical output LEDs mirror the detected colors immediately, giving visual confirmation of each classification result.

Detection results: all LEDs, two LEDs, one LED, and no LEDs lit
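
Mirroring the classification onto the output LEDs is a small mapping from detection flags to pin levels. This sketch assumes a bit-mask encoding for the detections and that red, green, and blue are wired to GPIO 7, 8, and 9 in that order; the article lists the pins but not the color assignment. The pin writer is passed in as a function pointer so the logic can be tested on a PC with `record_pin`, a hypothetical stub that only stores levels. On an Arduino-ESP32 build, a small wrapper calling `digitalWrite(pin, on ? HIGH : LOW)` could be passed instead.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical detection encoding: one bit per color. */
enum { DET_RED = 1u << 0, DET_GREEN = 1u << 1, DET_BLUE = 1u << 2 };

/* Assumed wiring: red on GPIO 7, green on GPIO 8, blue on GPIO 9. */
static const uint8_t LED_PINS[3] = { 7, 8, 9 };
static const unsigned LED_BITS[3] = { DET_RED, DET_GREEN, DET_BLUE };

/* Platform hook that drives one pin high (true) or low (false). */
typedef void (*pin_writer_t)(uint8_t pin, bool on);

/* Set every output LED to match the latest classification result. */
static void mirror_detections(unsigned detections, pin_writer_t write_pin) {
    for (int i = 0; i < 3; ++i) {
        write_pin(LED_PINS[i], (detections & LED_BITS[i]) != 0);
    }
}

/* Host-side stub for testing without hardware: records the last level per pin. */
static bool pin_state[16];

static void record_pin(uint8_t pin, bool on) {
    pin_state[pin] = on;
}
```

Writing every pin on every frame, rather than only the ones that changed, keeps the output in sync even if a frame is dropped.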

Conclusion

This project demonstrates that practical computer vision doesn't require expensive hardware or cloud connectivity. With careful algorithm selection and an efficient implementation, a $10 microcontroller can perform real-time image classification entirely on-device.

Embedded computer vision is becoming increasingly accessible. Projects like this show that useful vision capabilities can run on very small devices, no cloud required.

Repository: View on GitHub